Info

Supervisor: Prof. Dominik Schumacher

Software: VVVV Gamma

Python Runtime: Tebjan Halm

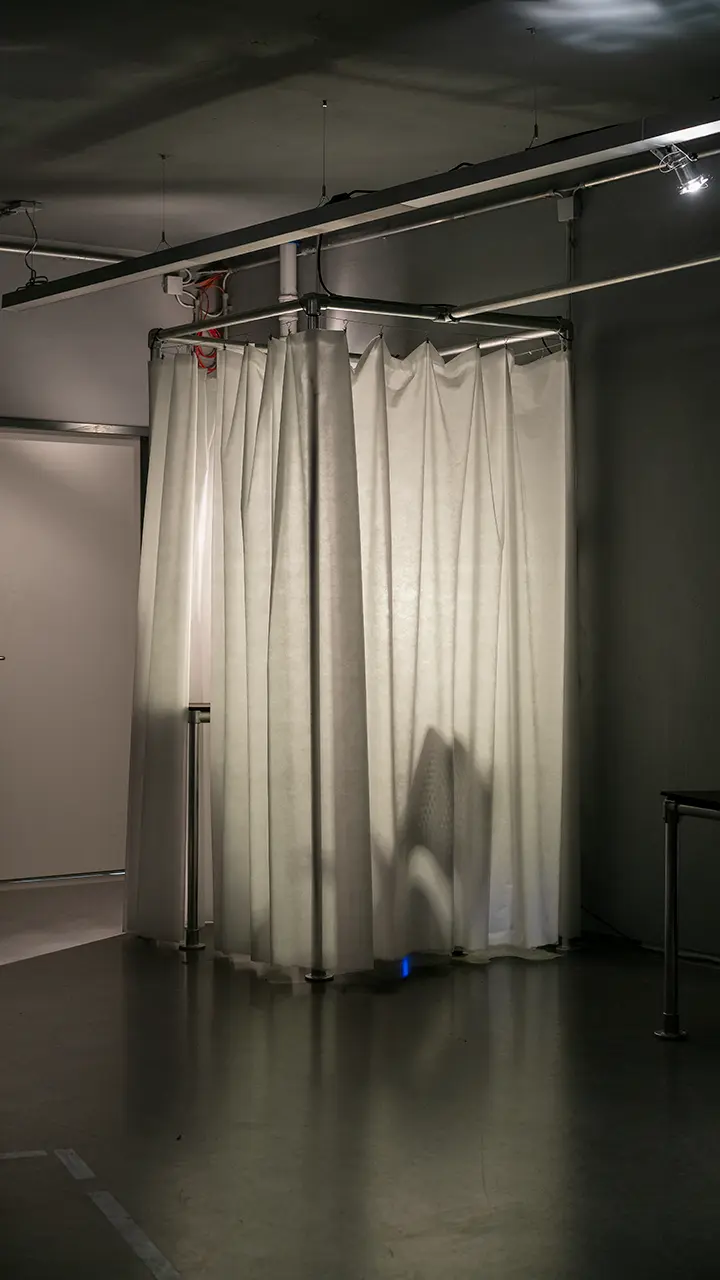

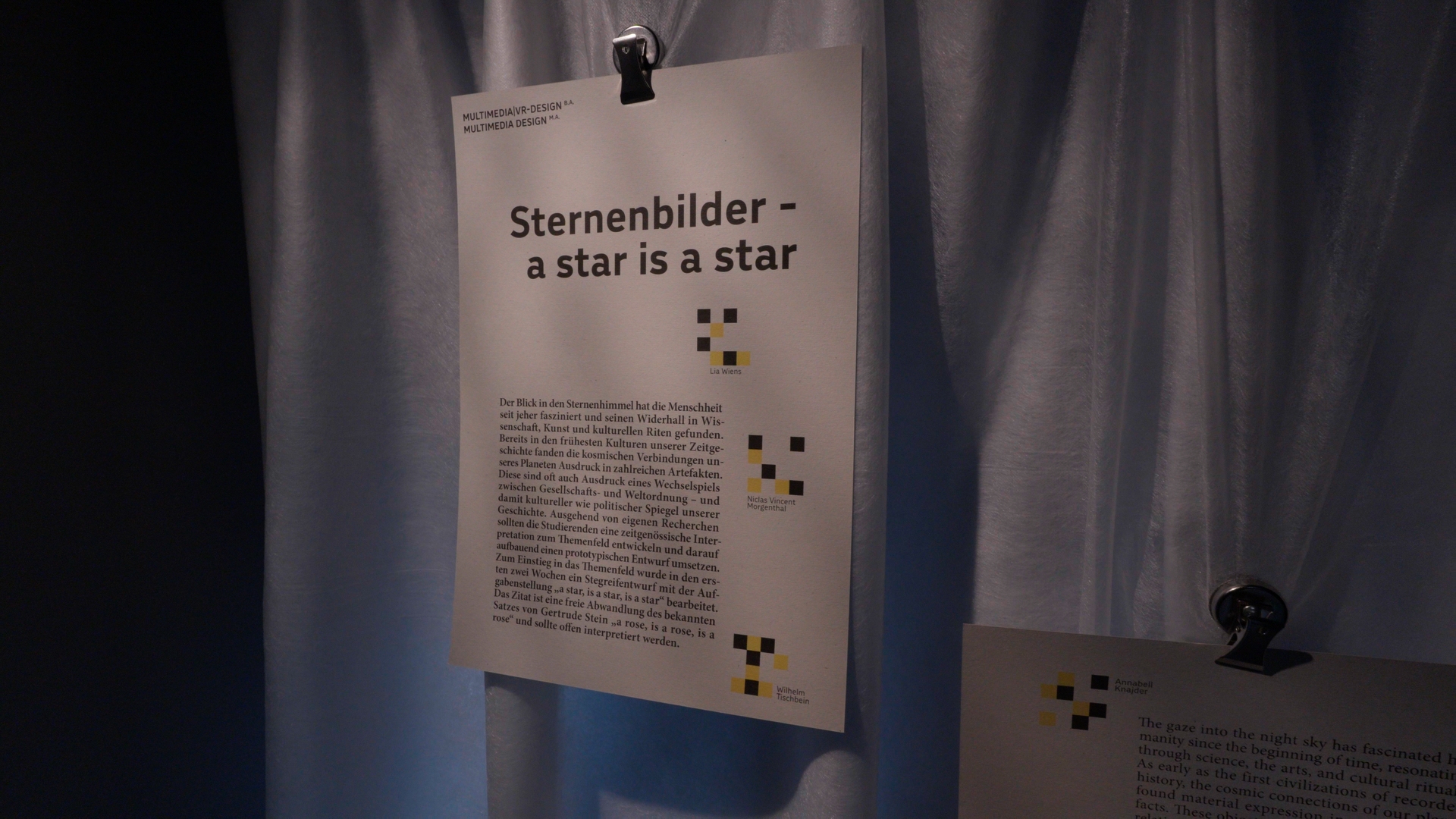

Action Unit __ was part of the project »Sternenbilder« at Burg Giebichenstein University of Art and Design.

Action Unit __

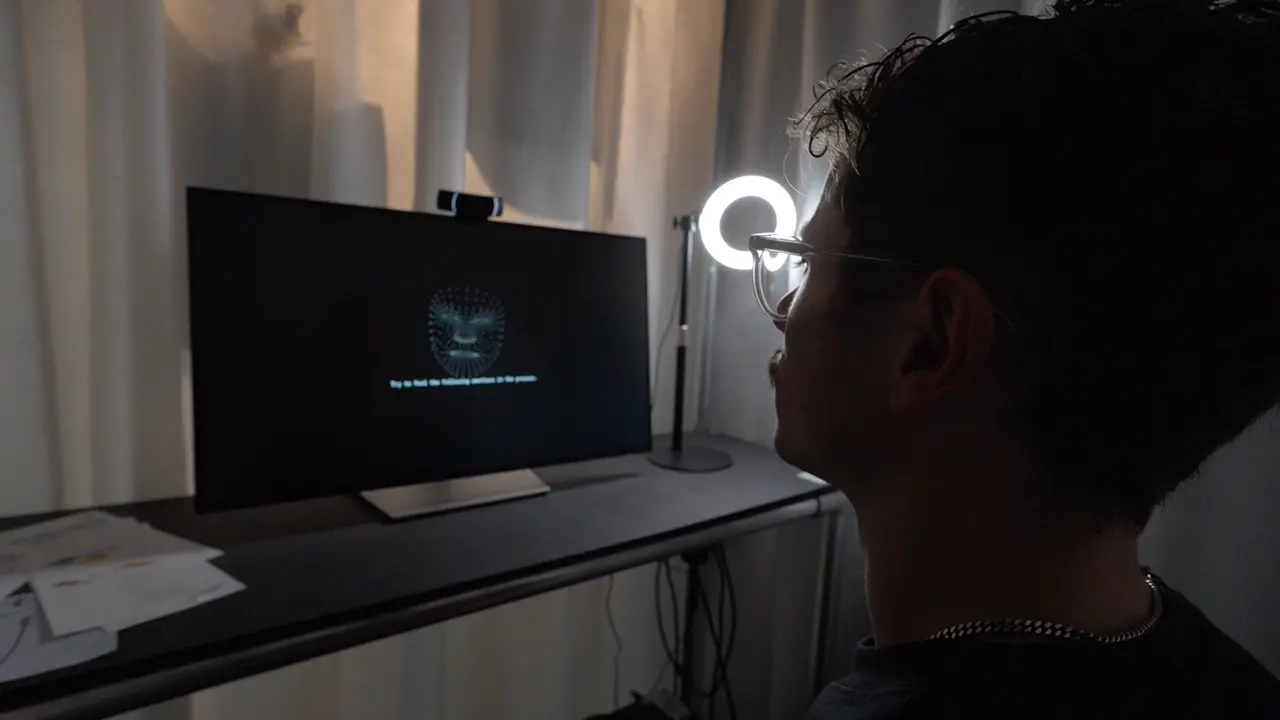

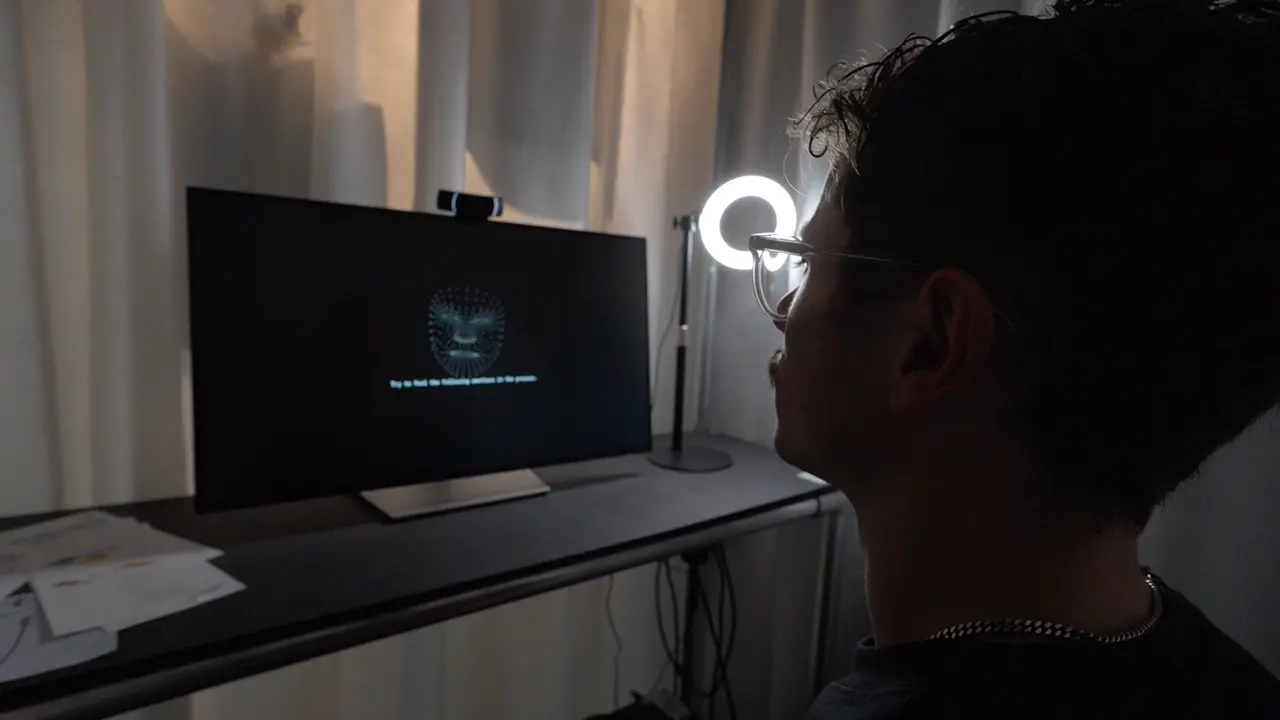

Action Unit __ is an interactive installation that confronts users with the controversial technology of AI-driven emotion recognition. It places participants in a direct, playful dialogue with an algorithm, challenging them to perform the seven universal basic emotions as defined by psychologist Paul Ekman. As users try to match their facial expressions to the system's rigid templates, the installation reveals the absurdities, biases, and profound limitations of a technology that claims to objectively decode our innermost feelings.

The project moves beyond a theoretical critique by creating a tangible, first-hand experience of being analyzed, judged, and categorized by a machine. By gamifying the act of emotional expression, it provokes critical questions: On what flawed scientific and cultural foundations are these systems built? What happens when we surrender the authority to interpret our own feelings to opaque algorithms? Action Unit __ serves as an experiential commentary on the precarious intersection of human complexity and artificial intelligence, urging a deeper conversation about a future where technology claims to know us better than we know ourselves.

The installation creates a real-time feedback loop between the user and several interconnected AI systems, orchestrated within the visual programming environment VVVV Gamma. A webcam captures the participant's face, which Google's MediaPipe framework tracks to isolate facial landmarks. This data is fed into the open-source DeepFace library, which analyzes the facial expression and scores it against the seven basic emotions.
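How a per-frame score might be reduced to a single "match" value can be sketched as follows. This is a minimal illustration, not the installation's actual code: the function names `conformity` and `dominant`, the normalization rule, and the example numbers are all assumptions, and the input is simply taken to be a dictionary of per-emotion scores such as an expression classifier might return.

```python
# Hypothetical sketch: the scoring rule and all names are assumptions,
# not taken from the installation itself.

def conformity(scores: dict[str, float], target: str) -> float:
    """Return the share (0.0 to 1.0) of the total score assigned to `target`."""
    if target not in scores:
        raise ValueError(f"unknown emotion: {target}")
    # Clamp negative values so a noisy classifier cannot skew the total.
    total = sum(max(value, 0.0) for value in scores.values())
    if total == 0:
        return 0.0
    return max(scores[target], 0.0) / total


def dominant(scores: dict[str, float]) -> str:
    """Return the label with the highest score."""
    return max(scores, key=scores.get)


# Made-up scores for one frame while the participant is asked to look happy.
frame = {"happy": 60.0, "sad": 20.0, "neutral": 20.0}
print(dominant(frame), conformity(frame, "happy"))
```

Normalizing against the total keeps the value comparable across frames even if the classifier's raw scores do not sum to a fixed number.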

At the end of the interaction, all collected performance data is passed to a locally run Large Language Model (Google's Gemma 3). The LLM generates a final, qualitative analysis, giving the system a voice to deliver its conclusive judgment on the user's "emotional conformity." The project was shown at the annual exhibition of Burg Giebichenstein University of Art and Design, where it analyzed and judged numerous visitors.
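The hand-off to the language model can be pictured as turning the collected results into a text prompt. The sketch below is only an assumption about how that might look: the record fields `target` and `score`, the function `build_prompt`, and the prompt wording are hypothetical, and the call to the model itself is deliberately left out.

```python
# Hypothetical sketch: field names and prompt wording are assumptions.
from statistics import mean


def build_prompt(rounds: list[dict]) -> str:
    """Summarize per-emotion results as a prompt for a text-generating model."""
    lines = [f"- {r['target']}: best match {r['score']:.0%}" for r in rounds]
    average = mean(r["score"] for r in rounds)
    return (
        "You are the voice of an emotion recognition system.\n"
        "Per-emotion results:\n"
        + "\n".join(lines)
        + f"\nAverage conformity: {average:.0%}\n"
        "Write a short final verdict on the participant's emotional conformity."
    )


# Made-up results for two rounds of the interaction.
results = [
    {"target": "happy", "score": 0.8},
    {"target": "fear", "score": 0.4},
]
print(build_prompt(results))
```

The resulting string would then be sent to whatever local model runtime is in use.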
